From scoring in the 90th percentile on the multistate bar exam to creating keto-friendly recipes using only video footage of the inside of a developer’s refrigerator, artificial intelligence (AI) software has grown far more capable of mimicking human activity over the last six months.[i] In the same period, AI services have surged in popularity. OpenAI’s flagship chatbot ChatGPT, for example, became the fastest-growing consumer application in history, reaching 100 million monthly active users only two months after its November 2022 launch.[ii] Within the next few years, AI will likely be deeply integrated into every aspect of human life, if it has not been already. This includes military operations, which raises two pressing questions: what exactly can AI software do when weaponized, and what are the potential consequences of its presence in war, military operations, and national security?

Putting AI Into Perspective

Before answering these questions, it is important to outline what distinguishes AI from other technologies. As its name suggests, AI can receive information and produce outputs independently, allowing it to imitate human brain functions such as reason and emotion and to develop certain opinions and behaviors. AI does this through a number of tools, including pattern detection, data mining, and natural language processing (NLP), which give it self-learning and self-correction capabilities. These capabilities allow AI to develop at astoundingly rapid rates. Experts have been tracking this development across three stages: artificial narrow intelligence (ANI, or weak AI), which is comparable to the mental scope and functionality of a human infant; artificial general intelligence (AGI, or strong AI), which is similar to that of an adult; and artificial super intelligence (ASI), which is far superior to human intelligence.[iii] The world is approaching the ASI stage much faster than one might expect.

The earliest forms of weak AI trace back to the mid-20th century, when scientists such as Alan Turing first conceptualized the possibility of machinery capable of reason. Weak AI evolved from ELIZA, one of the world’s first chatbots, which debuted in the 1960s, to chess programs that could defeat grandmasters in the 1990s and 2000s, and then to innovations such as IBM’s Watson and Apple’s Siri in the 2010s.[iv] Now, the world is transitioning toward the strong AI stage with the introduction of Generative Pre-trained Transformer (GPT) models, the class of AI behind ChatGPT, alongside other advances such as driverless cars. Massive progress has been made in AI’s ability to receive input during this stage. Take the GPT-3 and GPT-4 models, for example: the former was introduced in 2020 and is unimodal, so it can only understand text input, while the latter, released on March 14, 2023, is multimodal, accepting image as well as text inputs. GPT-4 also vastly outperforms GPT-3 on a number of aptitude tests, including the bar exam, GRE, SAT, and many AP exams.[v] AI will continue to develop beyond the AGI stage, greatly surpassing the brain capability of humans; some experts project that AI will reach the ASI stage before 2050. It is clear why many call the era of AI the fourth industrial revolution; society has developed a tool with an unfathomable potential to “rupture the Earth’s long-held human-centric status quo.”[vi] State officials must recognize the groundbreaking nature of this technology, as its development and presence within their realm can no longer be ignored.

WarGPT – Weaponizing AI

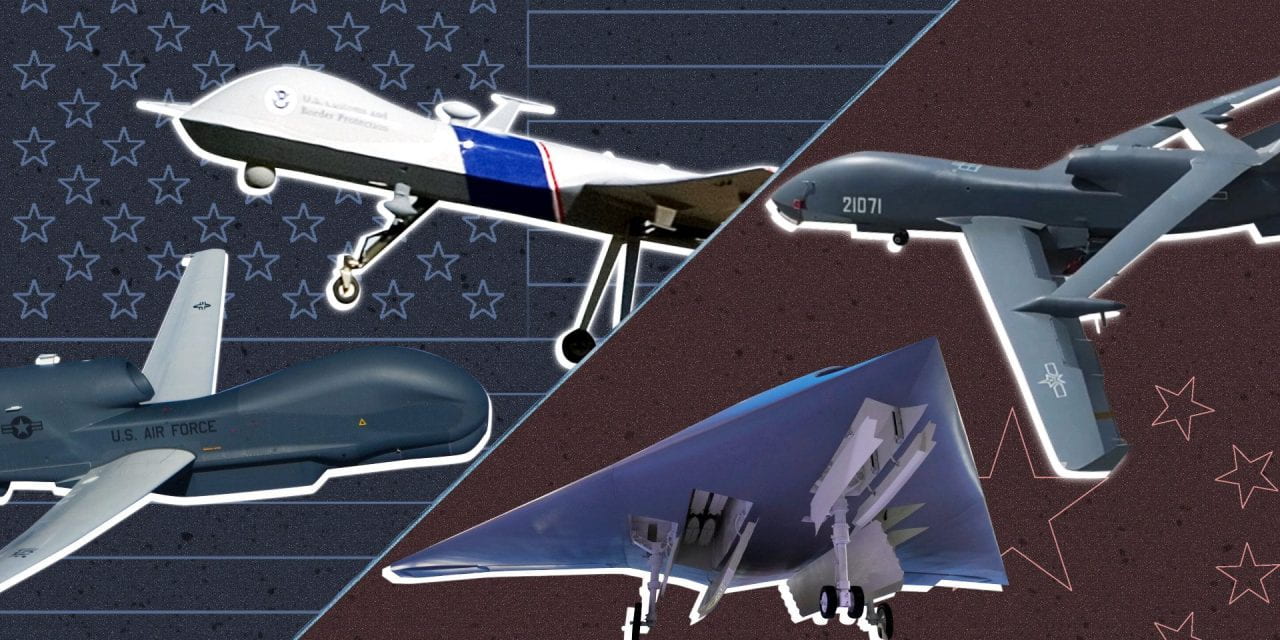

Several states around the world have already made AI an integral part of their military operations. Some have labeled the competing efforts of the United States and China to develop advanced AI technologies a ‘Digital Cold War,’ and AI facial recognition and targeting systems have already been deployed in the Russia-Ukraine War.[vii] In the US, personnel from all six branches of the military have used AI in training exercises.[viii] AI can enhance nearly every element of military efforts, from autonomous weapons systems with unprecedented levels of precision and lethality to data processing, research, cybersecurity, combat simulation, and much more. For example, fleets of military drones are equipped with AI technology that allows them to operate with swarm intelligence, not unlike that of bee hives or migratory birds. With swarm intelligence, drones act collectively, communicating vital information with one another and cooperating to achieve a given objective, all with minimal human control.[ix] Experts at the Defense Advanced Research Projects Agency (DARPA) project that, within the next few years, one individual will be able to control up to 250 drones and robots simply by directing them toward target locations to survey and contain.[x] In addition, AI navigation systems can rapidly identify the safest and fastest route to a given location, greatly easing transportation in difficult or contested terrain.[xi] This technology will almost certainly strengthen militaries that use it, as AI can quickly process large batches of information, facilitate decision-making, and aid in threat monitoring. Nevertheless, a lack of accountability for the actions of AI systems creates major ethical and diplomatic concerns.

Military operatives follow strict chains of command and rules of engagement for a number of reasons. Whether to comply with international laws of war or to clearly identify, credit, or punish the individual responsible for an action, these vital institutions maintain structural integrity within a country’s military and ensure that all actions are informed, intentional, and legal. AI systems, such as unmanned weapons, present a significant accountability gap, as they lack the agency and liability that human actors possess. If no primary operator for an AI weapon exists, uncertainty arises about who should be held responsible for its actions. Disputes will likely occur between system developers, programmers, and testers, which would further separate the machine from its human contributors.[xii] Moreover, the Law of Armed Conflict (LOAC) as applied by the US provides no penalty for neglect comparable to the liability for negligence under US civil law.[xiii] At present, this concern is hypothetical, but as strong AI technology continues to develop, preventing this scenario will become critically important for military operatives.

Currently, most AI-enabled military equipment is classified as narrow AI technology, consisting primarily of basic machine learning and data processing functionality, and is not capable of exercising the judgment needed to execute a strategic military decision. Goldfarb and Lindsay (2022) examine the implications of narrow AI for military operations, arguing that increased dependence on AI makes human military operatives more valuable and predicting that information systems and command institutions will become more attractive targets for state rivals.[xiv] While their prediction about the value of command institutions may be true, Goldfarb and Lindsay too readily dismiss the possibility of strong AI in state militaries, basing their skepticism on projections that strong AI-powered technology will not be developed for another thirty to seventy years.[xv] As mentioned, AGI technology is arriving much sooner than anticipated, and speculation about its use in military affairs needs to be approached with more care.

Conservative assumptions like Goldfarb and Lindsay’s about the timeline of AGI development have led policymakers to neglect discussions about the level of human presence required within military AI. At an international summit co-hosted by the Netherlands and South Korea at The Hague in February 2023, over 60 countries signed a call to action urging responsible use of military AI. The United States presented a framework at the summit that recommended “appropriate levels of human judgment in the development, deployment, and use” of AI weapons systems but did not explain what the word “appropriate” meant in this context.[xvi] The US received criticism from Human Rights Watch for this omission, and international law professor Jessica Dorsey stated that the signed statement was too weak and lacked enforcement mechanisms.[xvii] State officials can assure the public that humans will remain the ultimate decision-makers in national security, but advancements in AI technology might incentivize military operatives to delegate these critical tasks to machines.

Granting AI technology the autonomy to execute both operational and strategic tasks offers militaries significant speed advantages. Whereas humans need hours or minutes to reach a strategic decision, AI is capable of making critical decisions and acting on them in mere seconds. In the event that a state faces an immediate threat, such as an impending missile strike, time is extremely limited, which makes AI an appealing option for national defense. Additionally, the possibility that a defense system might be unable to communicate with humans for guidance is another reason to consider how best to grant AI permissions for strategic decision-making.[xviii] However, AI decision-making still has its challenges: creating national security strategy requires immense knowledge of rapidly evolving, nuanced state relations and international trends, including the opinions and views of friendly and enemy state leaders. This information may not be available to AI through data mining.[xix] Accurate data available to AI might be sparse, and AI may not recognize which data to use in making decisions.[xx] As a result, AI-developed military strategy cannot yet be relied upon, and states that depend on it risk suffering disastrous consequences.

As demonstrated by the 1983 Petrov incident, in which Soviet early-warning nuclear systems falsely detected an incoming strike from the US and the duty officer, Stanislav Petrov, judged the alert to be a false alarm, human instinct could be the difference between global peace and nuclear war.[xxi] Shifting away from human dependence by transitioning to AI military functionality, especially within nuclear states, will likely be viewed by others as a step toward destabilization and could create a domino effect internationally. Arms races, nuclear system development and automation, hypersensitivity to missile detection, and the renunciation of no-first-use policies might all occur in reaction to AI dependence.[xxii] Ultimately, this could lead to increased tensions between global powers at a time when Russia, NATO, the US, and China are already at odds with one another. If states do not convene to ratify a clear and enforceable framework for the development and use of military AI, none of these outcomes, including nuclear war, is out of the question.

Conclusion and Recommendation

AI dependence within military operations has increased considerably in the last decade, though many within the international community have not truly grappled with the ramifications of this trend. While much of the current research on AI is speculative, the international community must not disregard AI’s current capabilities or its near-term potential to rival human intelligence, which would make it an appealing alternative to human decision-makers in national defense strategy. As such, state officials must convene to establish clear, thorough, and binding regulations on the military use of AI. Otherwise, state leaders will almost certainly face a deterioration of interstate relations, a global hesitation to commit to nuclear peace agreements, and rising tensions as automated actors become increasingly present in critical military actions.

References

[i] Daniel Martin Katz et al., “GPT-4 Passes the Bar Exam,” SSRN Electronic Journal, March 15, 2023, https://doi.org/10.2139/ssrn.4389233; Mckay Wrigley, “I Used My iPhone to Give GPT-4 Eyes,” Twitter, May 2, 2023, https://twitter.com/mckaywrigley/status/1653464294493921280?s=12&t=u3R-HN1-krotHaK9qWSY7A.

[ii] Krystal Hu, “ChatGPT Sets Record for Fastest-Growing User Base – Analyst Note,” Reuters, February 2, 2023, https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/.

[iii] UBS, “A New Dawn,” UBS Artificial Intelligence, 2023, https://www.ubs.com/microsites/artificial-intelligence/en/new-dawn.html#:~:text=AI%20is%20divided%20broadly%20into,artificial%20super%20intelligence%20(ASI).

[iv] Rockwell Anyoha, “The History of Artificial Intelligence,” Science in the News Boston, August 28, 2017, https://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/.

[v] Claudia Slowik and Filip Kaiser, “GPT-4 vs. GPT-3. OpenAI Models’ Comparison,” Neoteric, April 26, 2023, https://neoteric.eu/blog/gpt-4-vs-gpt-3-openai-models-comparison/.

[vi] UBS, “A New Dawn.”

[vii] Marc Champion, “How U.S.-China Tech Rivalry Looks like a Digital Cold War,” Bloomberg, May 17, 2019, https://www.bloomberg.com/quicktake/how-u-s-china-tech-rivalry-looks-like-a-digital-cold-war; Stu Woo, “The U.S. vs. China: The High Cost of the Technology Cold War,” The Wall Street Journal, November 2, 2020, https://www.wsj.com/articles/the-u-s-vs-china-the-high-cost-of-the-technology-cold-war-11603397438; Toby Sterling, “U.S., China, Other Nations Urge ‘Responsible’ Use of Military AI,” Reuters, February 16, 2023, https://www.reuters.com/business/aerospace-defense/us-china-other-nations-urge-responsible-use-military-ai-2023-02-16/.

[viii] David Vergun, “Artificial Intelligence, Autonomy Will Play Crucial Role in Warfare, General Says,” U.S. Department of Defense, February 8, 2022, https://www.defense.gov/News/News-Stories/Article/Article/2928194/artificial-intelligence-autonomy-will-play-crucial-role-in-warfare-general-says/.

[ix] Sentient Digital, Inc., “Military Applications of AI in 2023,” Sentient Digital, Inc., January 31, 2023, https://sdi.ai/blog/the-most-useful-military-applications-of-ai/#:~:text=Warfare%20systems%20such%20as%20weapons,systems%20may%20require%20less%20maintenance.

[x] DARPA, “Offensive Swarm-Enabled Tactics (Offset) Third Field Experiment,” YouTube, January 27, 2020, https://www.youtube.com/watch?v=zrFiuNgOQJo.

[xi] Sentient Digital, Inc., “Military Applications of AI in 2023.”

[xii] Forrest Morgan et al., “Military Applications of Artificial Intelligence: Ethical Concerns in an Uncertain World,” RAND Corporation, 2020, 32–34, https://doi.org/10.7249/rr3139-1.

[xiii] Ibid.

[xiv] Avi Goldfarb and Jon R. Lindsay, “Prediction and Judgment: Why Artificial Intelligence Increases the Importance of Humans in War,” International Security 46, no. 3 (February 25, 2022): 7–50, https://doi.org/10.1162/isec_a_00425.

[xv] Ibid.

[xvi] Bureau of Arms Control, Verification, and Compliance, “Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy,” U.S. Department of State, February 16, 2023, https://www.state.gov/political-declaration-on-responsible-military-use-of-artificial-intelligence-and-autonomy/.

[xvii] Toby Sterling, “U.S., China, Other Nations Urge ‘Responsible’ Use of Military AI.”

[xviii] Mara Karlin, “The Implications of Artificial Intelligence for National Security Strategy,” Brookings, March 9, 2022, https://www.brookings.edu/research/the-implications-of-artificial-intelligence-for-national-security-strategy/.

[xix] Ibid.

[xx] Avi Goldfarb and Jon Lindsay, “Artificial Intelligence in War: Human Judgment as an Organizational Strength and a Strategic Liability,” Brookings, March 9, 2022, https://www.brookings.edu/research/artificial-intelligence-in-war-human-judgment-as-an-organizational-strength-and-a-strategic-liability/.

[xxi] Pavel Aksenov, “Stanislav Petrov: The Man Who May Have Saved the World,” BBC News, September 26, 2013, https://www.bbc.com/news/world-europe-24280831.

[xxii] Vincent Boulanin, “AI & Global Governance: AI and Nuclear Weapons – Promise and Perils of AI for Nuclear Stability,” United Nations University Centre for Policy Research, 2018, https://cpr.unu.edu/publications/articles/ai-global-governance-ai-and-nuclear-weapons-promise-and-perils-of-ai-for-nuclear-stability.html.